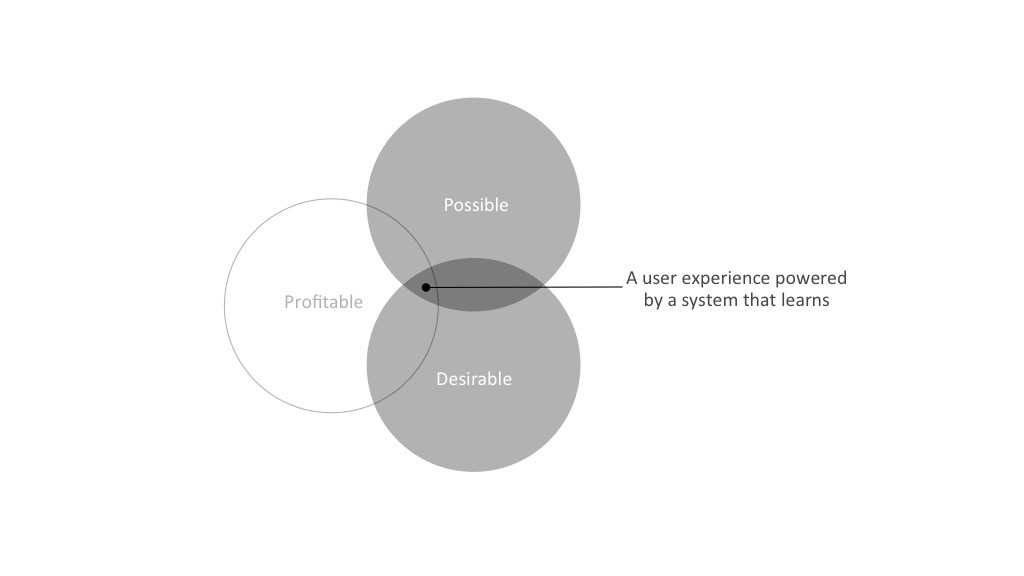

I find myself going back to Fabien Girardin’s excellent article, Experience Design in the Machine Learning Era, and mining it for more gold. Fabien shares:

“Nowadays, the design of many digital services does not only rely on data manipulation and information design but also on systems that learn from their users. If you would open the hood of these systems, you would see that behavioral data (e.g. human interactions, transactions with systems) is fed as context to algorithms that generates knowledge. An interface communicates that knowledge to enrich an experience. Ideally, that experience seeks explicit user actions or implicit sensor events to create a feedback loop that will feed the algorithm with learning material.”
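The loop in this quote can be sketched in a few lines. The Python below is a toy under stated assumptions, not how any particular service works. The class `FeedbackLoop` is hypothetical, behavioral data is reduced to counts of how often each item was shown and clicked, and the "knowledge" is an add-one smoothed click-through rate used to rank suggestions.

```python
from collections import defaultdict


class FeedbackLoop:
    """Hypothetical toy loop: behavioral data in, ranked suggestions out,
    user reactions fed back as new learning material."""

    def __init__(self):
        self.shown = defaultdict(int)    # times each item was suggested
        self.clicked = defaultdict(int)  # explicit positive actions on each item

    def _score(self, item):
        # "Knowledge": add-one smoothed click-through rate, so an item that
        # has never been shown starts at a neutral 0.5 instead of 0.
        return (self.clicked[item] + 1) / (self.shown[item] + 2)

    def suggest(self, items, k=3):
        # The interface communicates the knowledge as a ranked shortlist.
        ranked = sorted(items, key=self._score, reverse=True)[:k]
        for item in ranked:
            self.shown[item] += 1  # exposure is itself behavioral data
        return ranked

    def record(self, item, clicked):
        # The user's reaction closes the loop and changes future scores.
        if clicked:
            self.clicked[item] += 1
```

Each call to `suggest` records exposure and each call to `record` feeds a reaction back, so an item that is shown but ignored drifts down the ranking while untried items keep their neutral starting score.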

These feedback loop mechanisms typically offer ways to personalize, optimize or automate existing services. They also create opportunities to design new experiences based on recommendations, predictions or contextualization. Fabien shares these design principles:

Design for Discovery

We have seen that recommender systems help people discover known unknowns and even unknown unknowns. For instance, Spotify helps listeners discover music through a personalized experience built on matching an individual’s listening behavior with that of hundreds of thousands of other listeners. That type of experience raises at least three major design challenges.

Recommender systems have a tendency to create a “filter bubble” that limits suggestions (e.g. products, restaurants, news items, people to connect with) to a world that is strictly linked to a profile built on past behaviors. In response, data scientists must sometimes tweak their algorithms to be less accurate and add a dose of randomness to the suggestions.
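One simple way to add a dose of randomness is epsilon-style exploration: most slots show the top-ranked items, but each slot has a small probability of being filled by an item the profile would never surface on its own. This is a minimal sketch, assuming a ranked list and a wider catalog are already available; the function `diversify` and its `epsilon` parameter are hypothetical and are not Spotify’s or anyone else’s actual method.

```python
import random


def diversify(ranked, catalog, k=10, epsilon=0.2, seed=None):
    """Hypothetical helper: return k suggestions, mostly from the top of
    `ranked`, with each slot swapped for a random out-of-profile item
    with probability `epsilon`."""
    rng = random.Random(seed)
    top = list(ranked[:k])
    # Items outside the user's top-k: the world beyond the filter bubble.
    outside = [item for item in catalog if item not in top]
    suggestions = []
    for item in top:
        if outside and rng.random() < epsilon:
            # Exploration slot: trade a little accuracy for discovery.
            suggestions.append(outside.pop(rng.randrange(len(outside))))
        else:
            suggestions.append(item)
    return suggestions
```

With `epsilon=0` the function returns the plain top-k list; raising `epsilon` makes suggestions deliberately less accurate but more diverse, which is the trade-off the paragraph describes.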

It is also good design practice to let users update their preferences, to “reshape” the aspects of their profile that influence discovery. Fabien calls this “profile detox”. Amazon, for example, lets users remove items that might negatively influence their recommendations, like gifts they purchased for others.
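A profile detox can be as simple as letting the user exclude events before the profile is computed. This sketch assumes a profile is just a count of purchase categories; the `Purchase` record and the `build_profile` and `top_interests` functions are hypothetical and do not describe Amazon’s system.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Purchase:
    """Hypothetical purchase event used to build a profile."""
    item_id: str
    category: str


def build_profile(purchases, excluded_ids=frozenset()):
    """Count category affinity, skipping items the user asked to exclude
    (e.g. gifts bought for someone else)."""
    return Counter(p.category for p in purchases if p.item_id not in excluded_ids)


def top_interests(profile, n=1):
    """Return the n categories that would drive recommendations."""
    return [category for category, _ in profile.most_common(n)]
```

If a user bought two sci-fi books for themselves and three toys as gifts, the toys dominate the raw profile; excluding the three gift item IDs makes sci-fi the top interest again.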

Organizations that rely on subjective recommendations, like Spotify, now enlist humans to bring more subjectivity and diversity to the suggested music. This approach of using humans to clean datasets or to mitigate the limitations of machine learning algorithms is commonly called “Human Computation”, “Interactive Machine Learning” or “Relevance Feedback”.

Design for Decision Making

Data and algorithms provide ways to personalize decision making. For example, a bank can look at a customer’s average account balance and savings behavior and personalize investment opportunities according to that customer’s capacity to save money.

In the case of financial advice, a customer could hold accounts with other banks, which prevents a clear view of their overall saving behavior. A good design practice is to let users signal, implicitly or explicitly, when the information is poor or incomplete. It is the data scientist’s responsibility to specify the types of feedback that enrich their models and the designer’s job to find ways to make that feedback part of the experience.

Design for Uncertainty

Traditionally, the design of computer programs follows a binary logic, with an explicit, finite set of concrete and predictable states translated into a workflow. Machine learning algorithms change this with their inherent fuzzy logic. They are designed to look for patterns within a set of sample behaviors in order to probabilistically approximate the rules behind those behaviors. This approach comes with a certain degree of imprecision and unpredictable behavior, so these algorithms often return some information on how precise their output is.

For example, the booking platform Kayak predicts how prices will evolve by analyzing historical price changes. Its “fare-casting” algorithm is designed to return a level of confidence about whether it is a favorable moment to buy a ticket. A data scientist is naturally inclined to measure how accurately the algorithm predicts a value: “We predict this fare will be x”. That ‘prediction’ is really information based on historical trends. Yet predicting is not the same as informing, and a designer must consider how well such a prediction can support a user action: “Buy! This fare is likely to increase”. The word ‘likely’, paired with an overview of the price trend, is an example of a “beautiful seam” in the user experience.
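The gap between predicting and informing can be made concrete by translating a model’s confidence into advice a traveller can act on. This is a minimal sketch, assuming the model outputs a probability that the fare will rise; the function `fare_advice`, the 0.7 threshold and the wording are hypothetical and do not reflect Kayak’s actual logic.

```python
def fare_advice(p_increase: float, threshold: float = 0.7) -> str:
    """Turn a (hypothetical) model's probability that a fare will rise
    into advice that supports a decision rather than a bare number."""
    if not 0.0 <= p_increase <= 1.0:
        raise ValueError("p_increase must be a probability in [0, 1]")
    if p_increase >= threshold:
        # Confident enough to recommend an action, but still say "likely".
        return f"Buy now: this fare is likely to increase ({p_increase:.0%} confidence)."
    if p_increase <= 1 - threshold:
        return f"Wait: this fare is likely to drop or hold ({1 - p_increase:.0%} confidence)."
    # In the uncertain middle, be honest instead of forcing a call.
    return "No clear trend: the fare could go either way."
```

The uncertain middle band is the design choice worth noting: rather than hiding imprecision, the interface exposes it, which is one way to build the kind of seam the paragraph describes.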

Design for Engagement

Today, what we read online is based on our own behaviors and the behaviors of other users. Algorithms typically score the relevance of social and news content. The aim of these algorithms is to promote content for higher engagement or send notifications to create habits.

These actions taken on our behalf are not necessarily in our own interest. Major online services are fighting to grab our attention; their business is to keep people active on their platforms for as long and as often as possible. This leads to the development of “sticky”, and sometimes needy, experiences that may play on emotions like Fear of Missing Out (FoMO) or other obsessions to “dope” user engagement.

Our mobile phones are a good example: notifications, alerts, messages, retweets and likes that some people check 150 times a day or more on average. Today, designers can use data and algorithms to exploit people’s emotions and behavior. This new power raises the need for new design ethics in the age of machine learning.

Design for Time Well Spent

There are opportunities to design experiences radically different from engagement-driven ones. A bank, for example, has the advantage of being a business that runs on data and does not need customers to spend the maximum amount of time with its services. Tristan Harris’s Time Well Spent movement is particularly inspiring in that sense. He promotes experiences that use data to be either super-relevant or silent: technology that protects users’ focus and respects their time. Twitter’s “While you were away…” feature is a compelling example of that practice. Other services are good at suggesting the right moments to engage with them. Instead of measuring user retention, this type of experience focuses on how relevant each interaction is.
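The “super-relevant or silent” principle can be sketched as a notification gate: only items above a high relevance threshold interrupt the user, and everything else waits quietly for a digest. This is loosely modeled on the idea behind “While you were away…”, not on Twitter’s implementation; the `Notifier` class, its threshold and the relevance scores are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Notifier:
    """Hypothetical gate: push only super-relevant items, hold the rest
    silently for a later digest."""
    threshold: float = 0.9
    digest: list = field(default_factory=list)

    def handle(self, item: str, relevance: float) -> bool:
        """Return True if the item should interrupt the user now."""
        if relevance >= self.threshold:
            return True
        # Not relevant enough to interrupt: stay silent, keep it for later.
        self.digest.append((relevance, item))
        return False

    def while_you_were_away(self, n: int = 3) -> list:
        """Return the n most relevant held-back items and clear the digest."""
        best = [item for _, item in sorted(self.digest, reverse=True)[:n]]
        self.digest.clear()
        return best
```

A service built this way would be evaluated on how often its interruptions were worth it, not on how many it sent.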

Design for Peace of Mind

Data scientists are good at detecting normal behavior and abnormal situations. This new generation of machine learning brings new powers to society, but it also increases the responsibility of its creators. Algorithmic bias exists and may be inherent to the data sources. As a consequence, algorithms need to become more legible to people and auditable by regulators, so that their implications can be understood. The knowledge an algorithm produces should safeguard the interests of its users, and both the results of its evaluations and the criteria it uses should be explained.